Performative Belief: When You Adopt Opinions to Prove Membership

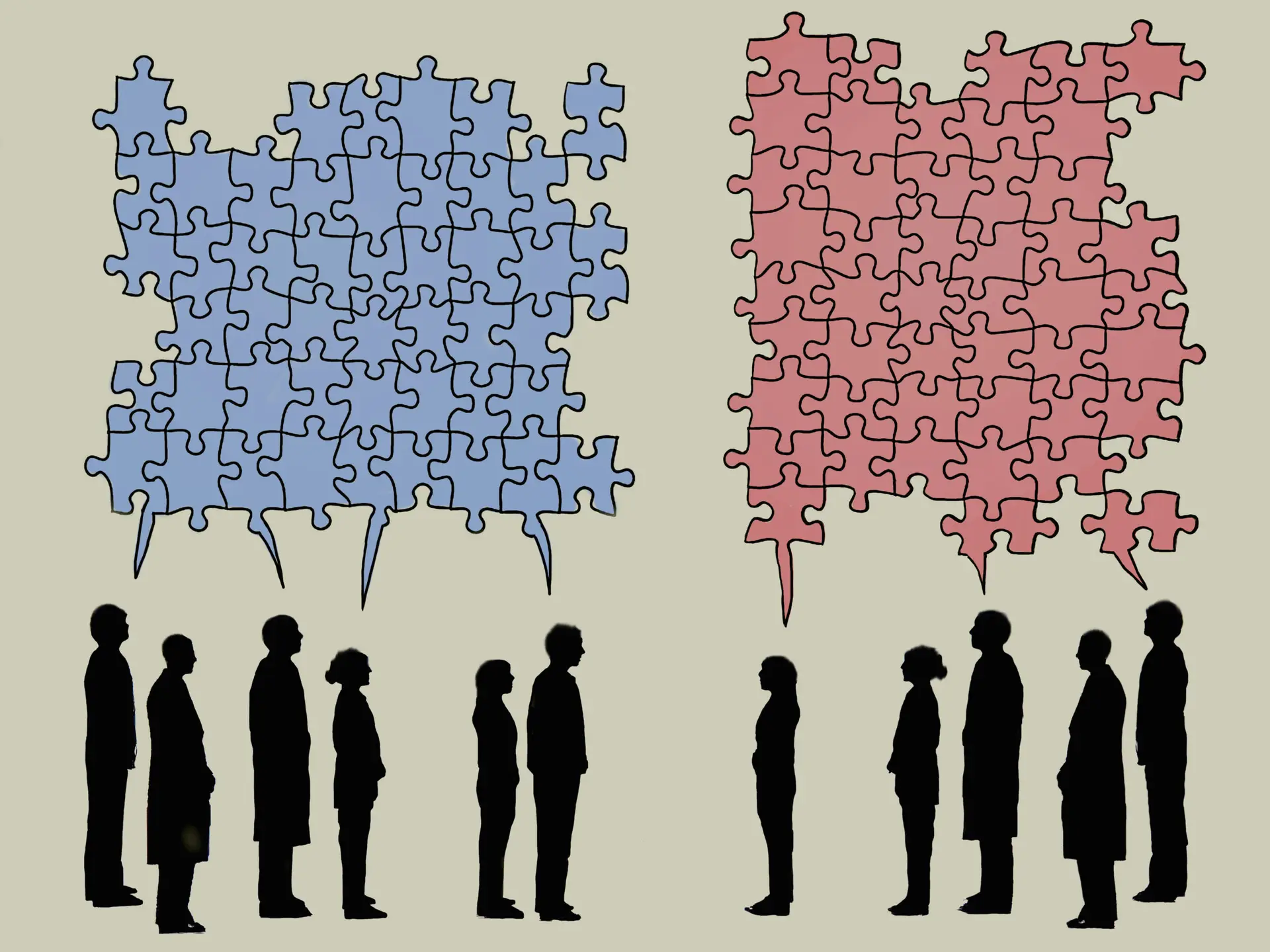

The Opinions You Wear Like a Uniform

Tell me what you believe about climate change, immigration, gun control, corporate power, gender ideology, or religious freedom, and I can tell you which tribe you belong to.

Not because those beliefs emerged from careful study. Not because you examined evidence and arrived at conclusions independently. But because beliefs have become tribal markers. Uniforms. Passwords that grant you access to the group.

You don’t hold these beliefs. You wear them.

And the moment you stop performing them convincingly, your tribe will notice.

This isn’t a conspiracy. It’s not manipulation. It’s simpler and more uncomfortable than that. Most people adopt opinions the way they adopt accents: automatically, unconsciously, by spending time around people who already speak that way.

The difference is that nobody pretends their accent is the result of rigorous intellectual deliberation.

But beliefs? We pretend those are ours.

The Gap Nobody Wants to Acknowledge

Here’s a question that should make you uncomfortable: How many of your political or cultural beliefs would you still hold if holding them got you exiled from your social circle?

Economist Bryan Caplan has spent years studying what he calls “rational irrationality”: the idea that people don’t form beliefs to be correct. They form beliefs to fit in. In his book The Myth of the Rational Voter, Caplan argues that when the personal cost of being wrong is low (as in voting, where a single ballot almost never decides the outcome), people indulge in beliefs that feel good or signal group loyalty, even when those beliefs are factually wrong.

The mechanism is simple: being right about politics doesn’t pay your rent. Being accepted by your tribe does.

So people optimize for acceptance, not accuracy.

Caplan’s own summary is blunter: voters are not merely ignorant but irrational, and they vote accordingly.

The result is a massive gap between what people say they believe and how they actually behave. Researchers call this “belief-behavior inconsistency,” and it shows up everywhere.

People who claim to support radical wealth redistribution don’t voluntarily donate more to charity. People who claim climate change is an existential threat still fly across the country for vacations. People who claim to oppose government overreach still demand laws that enforce their moral preferences.

This isn’t hypocrisy in the traditional sense. It’s something more fundamental: the belief was never about reality. It was about signaling.

The Social Psychology of Borrowed Convictions

Psychologist Solomon Asch’s famous conformity experiments in the 1950s revealed something disturbing about human nature. When placed in a group where everyone else gave an obviously wrong answer to a simple question (which of several lines matched a reference line in length), roughly 75% of participants conformed at least once.

Not because they were confused. Not because they didn’t know the right answer. Because the social cost of disagreeing felt higher than the cost of being wrong.

Fast-forward seventy years, and the mechanism hasn’t changed. It’s just scaled.

Social media has turned belief signaling into a full-time performance. Every post is a declaration. Every like is an endorsement. Every share is a loyalty oath. And the algorithm rewards those who signal loudest and most frequently.

Research from Yale psychologist Dan Kahan shows that people don’t process political information to become informed. They process it to reinforce their identity. In studies on “motivated reasoning,” Kahan found that when presented with data that contradicts their political tribe’s position, people with higher education and cognitive ability are actually more likely to reject the data.

Why? Because smarter people are better at rationalizing. They’re better at constructing defenses for beliefs they never chose in the first place.

This should terrify you. It means intelligence doesn’t protect you from tribal belief adoption. It just makes you better at defending it.

How Performative Beliefs Spread Faster Than True Ones

Here’s why tribal opinions dominate public discourse: they spread faster.

A belief that’s true but socially costly gets suppressed. A belief that’s false but tribally advantageous gets amplified. Not because of some conspiracy, but because social incentives reward signaling over truth.

Evolutionary psychologist Robert Kurzban explains in Why Everyone (Else) Is a Hypocrite that the human brain isn’t designed to have consistent beliefs. It’s designed to manage social reputation. Different parts of your brain “believe” different things depending on what’s strategically useful in a given moment.

The part of your brain that says “I care deeply about the environment” while you idle in traffic isn’t lying. It’s signaling. The part of your brain that knows you’re not actually going to change your behavior isn’t contradicting the first part. It’s just operating in a different social context.

Your brain is a public relations department, not a philosophy department.

And public relations departments don’t care about truth. They care about perception.

This is why you see beliefs spread in waves. Not because new evidence emerged. But because the social reward for holding that belief changed. A position that was fringe yesterday becomes mandatory today, not because it became more true, but because it became more useful for signaling tribal membership.

The Exhaustion Nobody Talks About

Performing your beliefs is exhausting.

You have to stay updated on what your tribe currently believes. You have to know which opinions are acceptable this week and which ones got you exiled last month. You have to monitor your language, your tone, your associations.

One slip and you’re suspect.

This is why political discourse feels so draining. It’s not intellectual engagement. It’s social policing. And everyone is both cop and criminal, constantly monitoring themselves and others for signs of deviance.

Psychologist Jonathan Haidt describes this as “moral conformity pressure,” and his research shows it’s intensifying. In surveys, Americans increasingly report self-censoring in professional and social settings, not because they lack convictions, but because the cost of expressing the wrong belief has become unbearable.

The result? People develop two sets of beliefs: public and private.

The public set is for performance. The private set is for reality.

And the gap between the two grows wider every year.

Current Examples: The Belief Du Jour

Let’s get specific. Look at how quickly certain beliefs became tribal shibboleths over the last five years:

COVID lockdowns: In early 2020, questioning lockdown efficacy got you labeled anti-science. By 2023, even progressive outlets were running articles on studies concluding that lockdowns caused more harm than benefit. But during the performance window, the belief wasn’t about evidence. It was about tribe.

Defund the Police: In the summer of 2020, this became a mandatory progressive belief almost overnight. Not because crime data changed. Not because new research emerged. Because George Floyd’s death created an emotional mandate, and the tribe moved in unison. Two years later, many of the same politicians quietly restored police funding, but during the signal window, disagreement meant exile.

Election integrity: Questioning the 2016 election results was patriotic resistance. Questioning the 2020 results was insurrection. Same behavior. Different tribes. The belief wasn’t about evidence. It was about whose team won.

Transgender athletes: Five years ago, this wasn’t a front-burner issue for most people. Now it’s a litmus test. Not because new biological research emerged, but because the tribes demanded clarity. Pick a side or get crushed in the middle.

Notice the pattern? These beliefs didn’t percolate up from individual deliberation. They cascaded down from tribal leaders and spread through social pressure.

You adopted them the way you’d adopt a team jersey. Not because you studied the franchise. Because everyone around you was already wearing it.

The Question That Breaks the Spell

Here’s the diagnostic test: What beliefs do you hold that would get you exiled from your tribe if you questioned them publicly?

If the answer is “none,” you’re lying to yourself.

Every tribe has orthodoxy. Every orthodoxy has enforcers. And every enforcer started as someone who just wanted to belong.

Now ask the harder question: How many of those beliefs did you arrive at independently, through your own research and reasoning, before your tribe told you what to think?

Be honest.

If your political beliefs align perfectly with your tribe’s talking points, you’re not thinking. You’re performing.

And performance isn’t the same as conviction.

The Trap: Mistaking Performance for Depth

The cruelest part of performative belief is that it feels like conviction. You get emotionally invested. You argue passionately. You feel outrage when someone challenges your position.

But that emotional intensity isn’t proof the belief is yours. It’s proof you’ve tied your identity to it.

Kevin Simler and Robin Hanson, in The Elephant in the Brain, explain that humans are experts at self-deception. We don’t consciously decide to adopt beliefs for social reasons and then pretend they’re principled. We genuinely convince ourselves the performance is authentic.

This is why challenging someone’s tribal belief feels like a personal attack. You’re not just questioning their opinion. You’re threatening their membership. Their identity. Their social safety.

And humans will defend social safety far more fiercely than they’ll defend abstract truth.

The Way Out: Subtraction, Not Addition

You can’t think your way out of performative belief by adding more information. More data just gives you more ammunition to defend positions you never chose.

The way out is subtraction.

Stop performing. Stop signaling. Stop monitoring what your tribe expects you to believe this week.

Ask yourself: If I lost every social connection tomorrow, what would I actually believe?

If I had to defend this position to someone I respected who disagreed, could I do it without appealing to tribal talking points?

If this belief cost me friendships, would I still hold it?

These questions are uncomfortable because they reveal how much of your identity is borrowed. How much of your moral certainty is just crowd-sourced conformity.

But discomfort is the price of authenticity.

And authenticity is the only thing that separates conviction from performance.

What Christianity Demands (And Why It’s Dangerous to Tribes)

Christianity makes a specific and uncomfortable claim: truth exists independent of social consensus.

Not “your truth.” Not “my truth.” Truth.

And Christians are called to pursue it even when it costs them social belonging.

This is why early Christians were persecuted. Not because they believed weird things about God, but because they refused to perform the required beliefs of Roman society. They wouldn’t offer incense to the emperor. They wouldn’t participate in civic religion. They wouldn’t signal loyalty to the tribe when tribal loyalty demanded rejecting truth.

That same tension exists today.

When your tribe demands you affirm something you know is false, Christianity doesn’t give you permission to perform compliance. It demands you stand on truth, even when standing costs you everything.

This doesn’t mean Christians are always right. It means they’re supposed to care more about being aligned with reality than being accepted by the crowd.

Most don’t. Most perform just like everyone else. But the standard remains.

Conformity is performance. Transformation is costly.

As Paul writes in Romans 12:2, “Do not be conformed to this world, but be transformed by the renewal of your mind.”

Final Thought: You Are Not Your Beliefs

Here’s the release: your beliefs are not your identity.

You can change your mind without losing yourself. You can question your tribe without becoming its enemy. You can hold positions that don’t fit neatly into left or right without being politically homeless.

But only if you’re willing to stop performing.

Stop wearing beliefs like a uniform. Stop adopting opinions to prove membership. Stop defending positions you never actually examined.

Start asking: Is this true? Or does this just feel safe?

One produces conviction. The other produces exhaustion.

And you’ve been exhausted long enough.